Robotics research group Robo@FIT

Laboratory of Human-Robot Communication

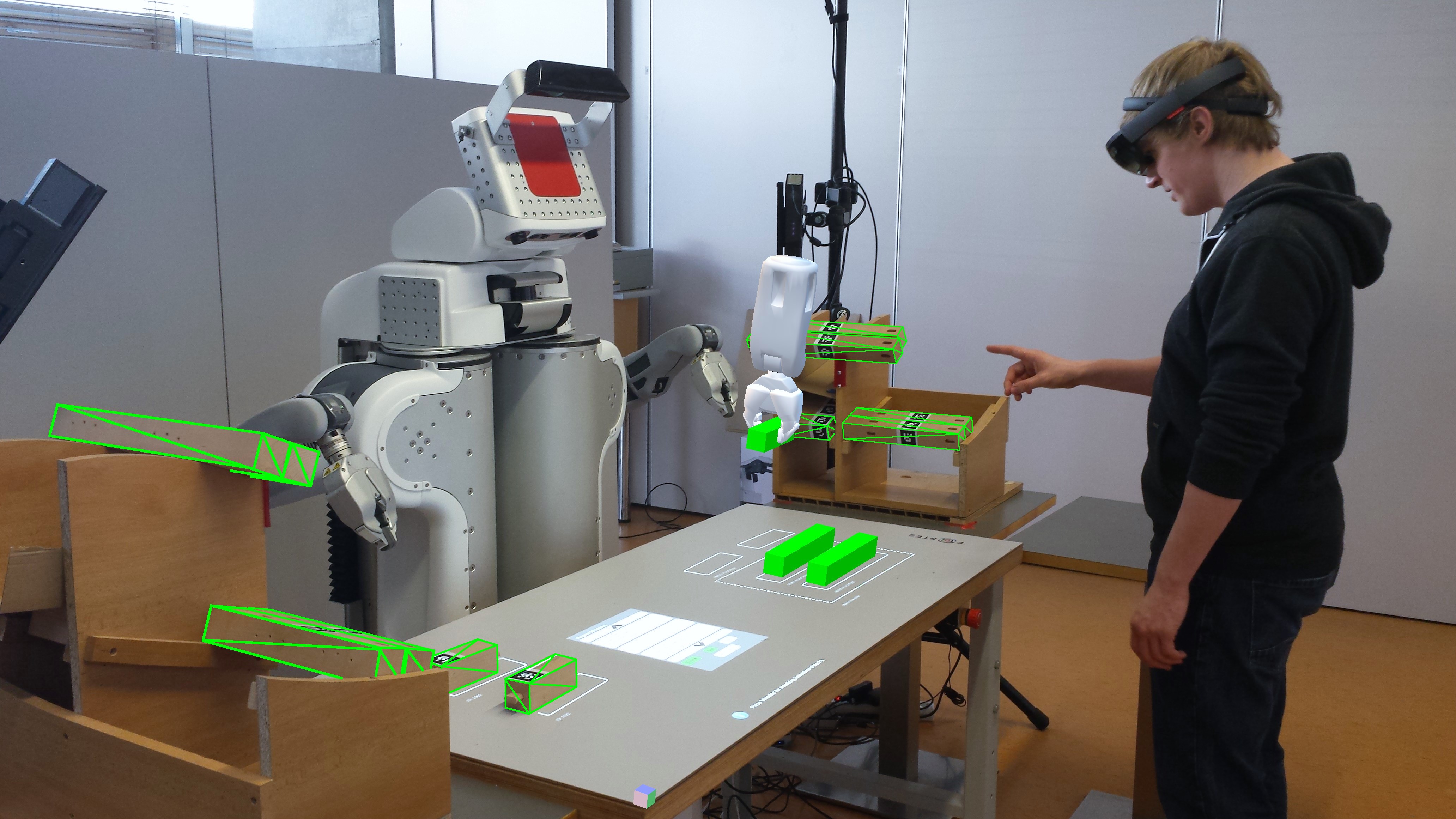

The laboratory focuses on research and experimentation in the field of human-machine communication, currently concentrating on human-robot interaction. The lab is spatially connected to a robotics lab, and ground robots are used for various experiments. The lab is further equipped with an extensive set of sensors for capturing the situation in the robot's environment, the position and movement of people and robots, operator gestures, etc., as well as a number of specialized display devices for virtual and augmented reality (VR/AR glasses, spatial AR, and mobile devices).

Robots

- PR2 - General-purpose research robot with two arms, each with seven degrees of freedom (one equipped with a force/torque (F/T) sensor), several cameras, and two lidars.

- Dobot M1 - Industrial SCARA robot.

- Dobot Magician - A small tabletop robot with four degrees of freedom, suitable for light handling tasks.

- Niryo One - A small tabletop robot with six degrees of freedom.

- Jetson UAV - Experimental drone.

Sensors

- Depth Sensors

- GPS Microstrain 3DM-GX3-45 - Fuses GPS and inertial data for higher accuracy and sampling frequency, and remains usable during short-term GPS signal outages.

- Eye tracker (Pupil Core) - Glasses for measuring the direction of the user's gaze.

- Emotiv EPOC - EEG headset for measuring brain activity, usable e.g. for analyzing environmental influences, stress events, etc.

- Empatica E4 - Research-grade measurement of physiological data (skin temperature, pulse, skin resistance) using an inconspicuous, watch-like wearable.

- Stratos Explore - Device providing mid-air haptic feedback using ultrasound. Used for research on non-traditional user interface controls.

- Others - Myo Armband, Leap Motion

Mixed Reality Setups

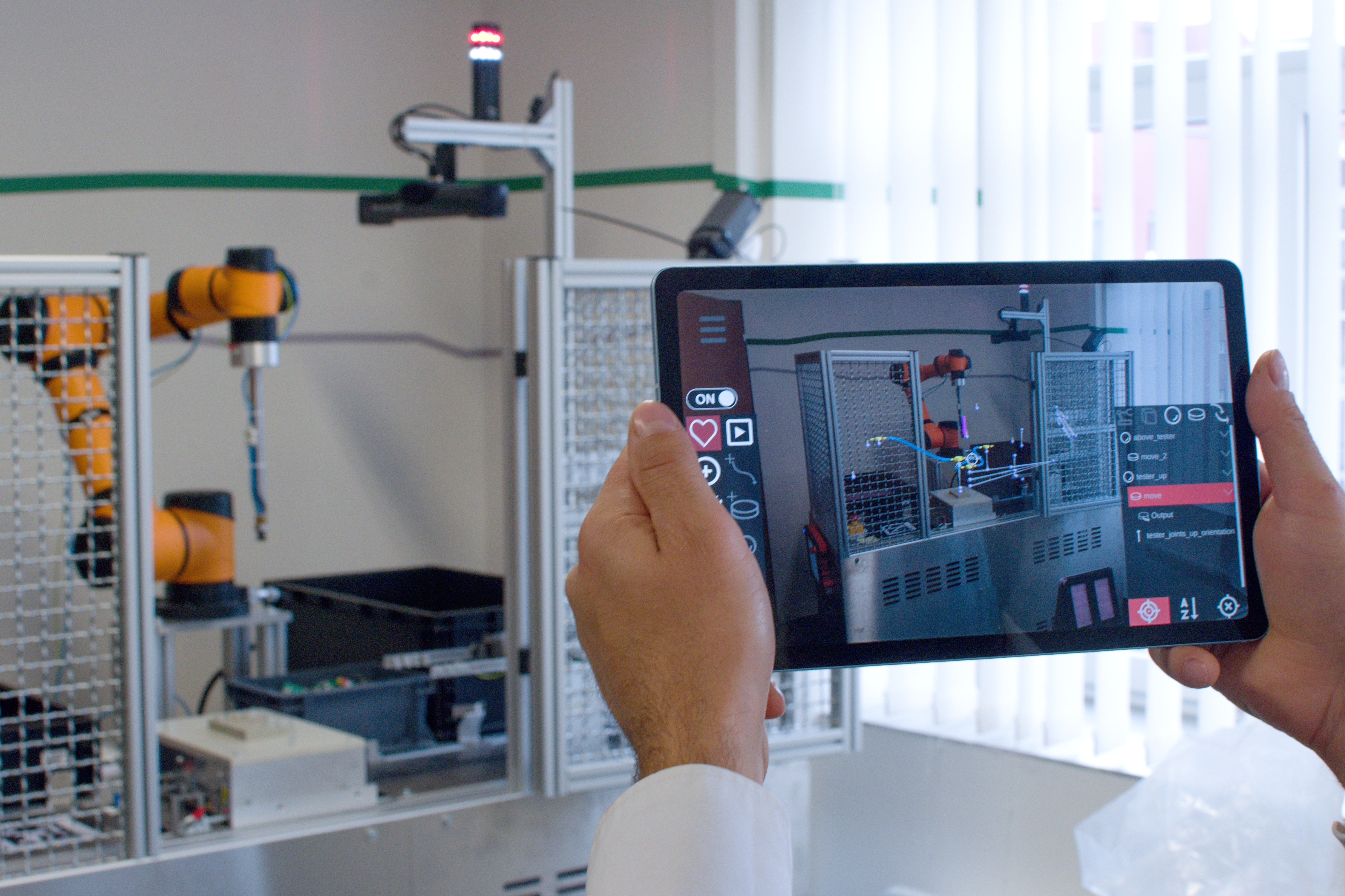

- Handheld Augmented Reality

- Tablets: Samsung S6 and S7, iPads

- AREditor - our in-house application for robot programming, visualization and debugging of robotic procedures, workplace annotation, and multi-user cooperation. For more information, see our GitHub repository and/or video.

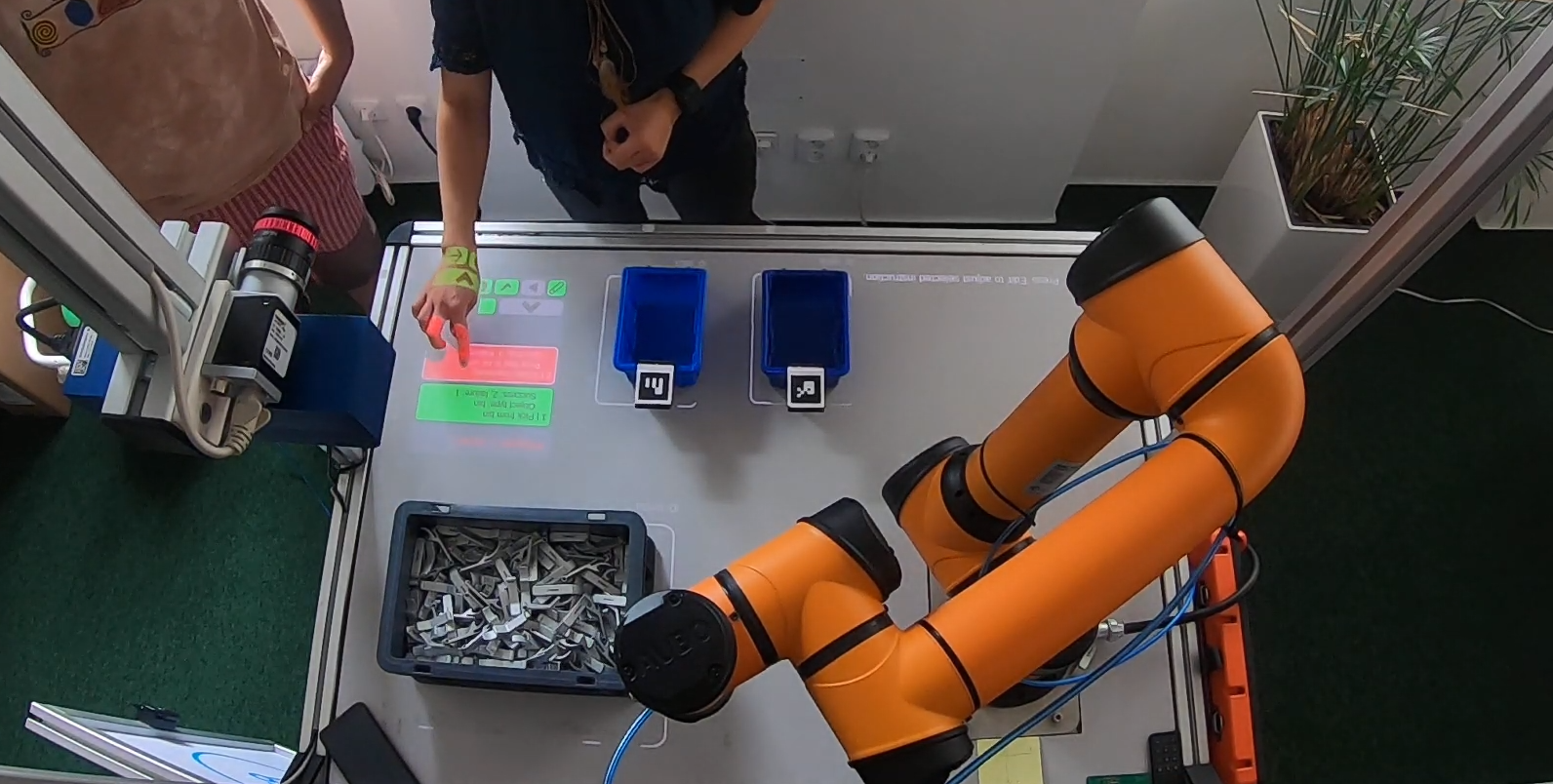

- Interactive Spatial Augmented Reality

- 2x 5000 ANSI lumen projectors, various smaller projectors, and 2x small laser projectors that can project at any angle and are therefore suitable, e.g., for mounting on a robotic arm.

- 2x Hachi Infinite M1 - touch projectors (with an integrated camera for finger detection)

- 3 desks equipped with a projection foil and a capacitive touch layer: a workshop touch table with a 1500 x 700 mm touch board, a demonstration workplace with a 996 x 574 mm board, and a mobile 1000 x 600 mm board.

- Numerous holders and stands enabling rapid prototyping of various workplace configurations, sensor placements, etc.

- ARCOR (also known as ARTable) - a vision of a near-future workspace where humans and robots can safely and effectively collaborate. Our main focus is on human-robot interaction, especially robot programming, with the goal of making it feasible for any ordinary skilled worker. The interaction is based mainly on interactive spatial augmented reality, i.e., a combination of projection and a touch-sensitive surface. For more information, see GitHub, the video, and another video.

- AR Head-Mounted Displays

- HoloLens (v1 and v2)

- Visualization of robotic procedures, robot programming, workplace annotation, setting collision envelopes, training of new employees, step-by-step program execution, cooperation of multiple users, etc. Examples of our work can be seen in the video.

- Head-up display for drone pilots, telepresence.

Demonstration Modular Workplace

The modular workplace, which simulates an Industry 4.0 environment, serves as a demonstrator of the ARCOR2 system, which enables visual programming in augmented reality using the AREditor application (see video). Each module is equipped with its own robot (a Dobot Magician or a Dobot M1) and a 3D sensor (Azure Kinect) for monitoring the workplace, the robot, and the components. When the modules are connected, the robots can, for example, hand over components and thus cooperate on a more complex task. A demonstration task is prepared at the workplace, including the detection of components and their manipulation by a pair of robots. Using a tablet with the AREditor application, one can view the task, control program execution, or, for example, adapt the program to a new type of component.

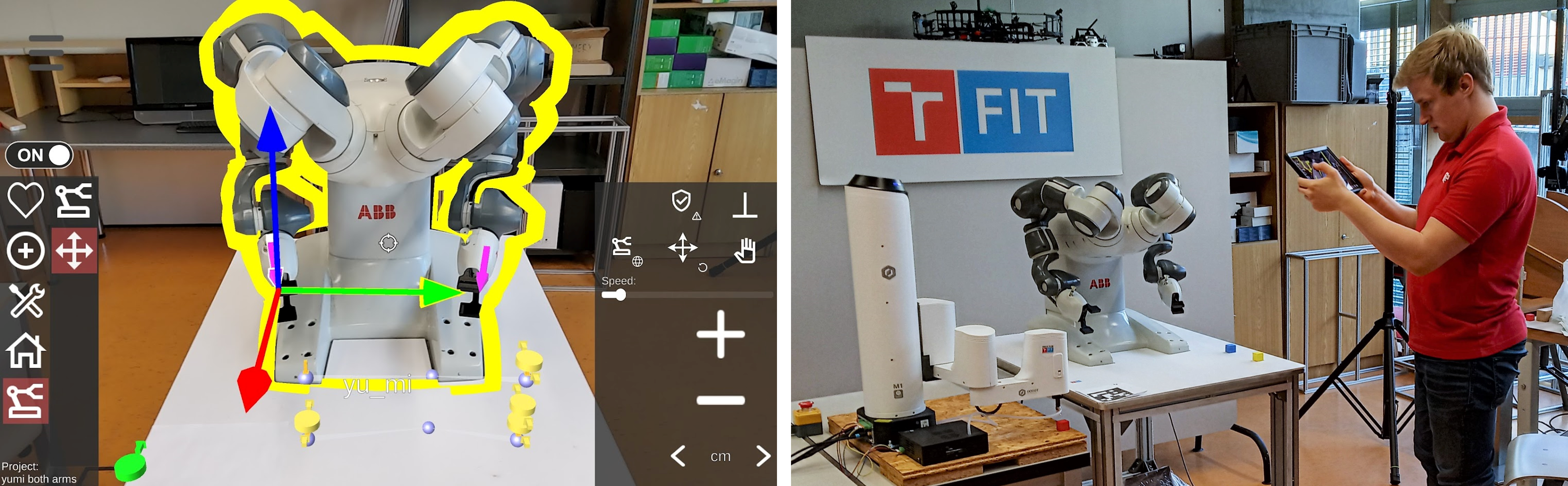

ABB YuMi

In cooperation with ABB, we integrated a borrowed dual-arm collaborative robot, the ABB YuMi, into the ARCOR2 system (video). We then prepared a demonstration task with the robot, showing how easily the program can be edited using the AREditor application and how a robot with more than one arm can be handled. Including the Dobot M1 in the demonstration further shows that ARCOR2 can serve as a unified environment for devices (robots) from various vendors, which then do not need to be controlled and programmed through individual proprietary applications or PLCs.

The integration consists of several simple programs in the RAPID language that run a TCP server on the robot controller. An ARCOR2 plugin connects to this server to communicate with the robot, for example, to read the positions of the end effectors and individual joints or to send motion requests. In addition, the Robot Web Services interface is used, for example, to restart the robot program or to control the state of its motors. Ready-to-use support for the YuMi robot is freely available on GitHub.
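The paragraph above describes a plugin that talks to a TCP server running as a RAPID program. The actual message format of the ARCOR2 YuMi plugin is not described here, so the following Python sketch assumes a hypothetical newline-terminated ASCII protocol. The commands `GET_JOINTS` and `MOVE_JOINTS`, the class `RobotTcpClient`, and the function `serve_fake_robot` are illustrative names only, not part of ARCOR2 or RAPID. The sketch shows the client side of such a request/reply exchange, plus a fake server standing in for the robot controller so it can run locally; the Robot Web Services calls are not covered.

```python
import socket
import threading


class RobotTcpClient:
    """Plugin-side client for a TCP server running on the robot controller.

    Hypothetical protocol (an assumption, not the real plugin's format):
    one ASCII request per line, one reply per line.
      GET_JOINTS            -> "j1 j2 ... jn"
      MOVE_JOINTS j1 ... jn -> "OK" on success, anything else on failure
    """

    def __init__(self, host: str, port: int, timeout: float = 5.0) -> None:
        self._sock = socket.create_connection((host, port), timeout=timeout)
        # Buffered text reader so replies can be consumed line by line.
        self._reader = self._sock.makefile("r", encoding="ascii", newline="\n")

    def _request(self, line: str) -> str:
        """Send one request line and return the stripped reply line."""
        self._sock.sendall((line + "\n").encode("ascii"))
        reply = self._reader.readline()
        if not reply:
            raise ConnectionError("robot server closed the connection")
        return reply.strip()

    def joints(self) -> list[float]:
        """Read the current joint positions."""
        return [float(v) for v in self._request("GET_JOINTS").split()]

    def move_joints(self, values: list[float]) -> None:
        """Ask the robot to move to the given joint positions."""
        reply = self._request("MOVE_JOINTS " + " ".join(f"{v:.4f}" for v in values))
        if reply != "OK":
            raise RuntimeError(f"move rejected by robot: {reply!r}")

    def close(self) -> None:
        self._reader.close()
        self._sock.close()


def serve_fake_robot(server: socket.socket, n_joints: int = 7) -> None:
    """Stand-in for the RAPID TCP server, for local testing only.

    Accepts a single client and answers its requests until it disconnects.
    """
    joints = [0.0] * n_joints
    conn, _ = server.accept()
    with conn, conn.makefile("r", encoding="ascii", newline="\n") as reader:
        for line in reader:
            cmd, *args = line.split() or [""]
            if cmd == "GET_JOINTS":
                reply = " ".join(f"{j:.4f}" for j in joints)
            elif cmd == "MOVE_JOINTS" and len(args) == n_joints:
                joints = [float(a) for a in args]  # a real robot would move here
                reply = "OK"
            else:
                reply = "ERR"
            conn.sendall((reply + "\n").encode("ascii"))


if __name__ == "__main__":
    # Demo against the fake server on an ephemeral local port.
    server = socket.create_server(("127.0.0.1", 0))
    port = server.getsockname()[1]
    threading.Thread(target=serve_fake_robot, args=(server,), daemon=True).start()
    client = RobotTcpClient("127.0.0.1", port)
    client.move_joints([0.1] * 7)
    print(client.joints())
    client.close()
    server.close()
```

A line-based text protocol is used here only because it is easy to parse on both the RAPID and the Python side; the real plugin may use a different encoding or command set. The fake server assumes seven joints per arm and a single client connection.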